How Body Scanning Became the Latest Health Club Must-Have

Finally, a New Year's resolution you can keep.

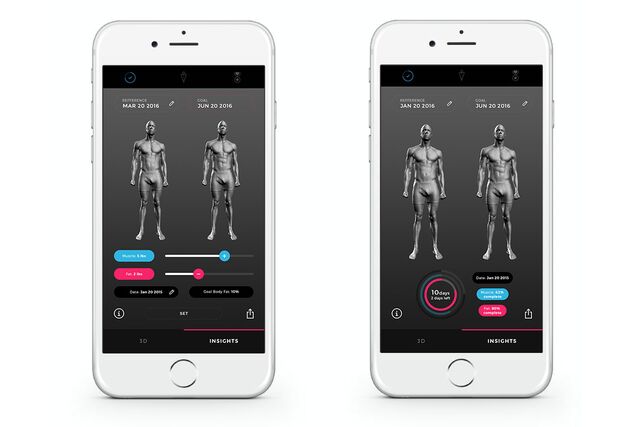

Screenshots from the Naked app

Source: Naked

Walk into David Barton's new gym in Manhattan, TMPL, and you will be greeted by an array of high-tech fitness options—fingerprint scanners, giant screens with lifelike landscapes behind the Spin instructors, and a saltwater pool, all bathed in his trademark recessed LED lighting. But the real game-changing gadget here is not on the weight room floor. It's a Styku 3D body scanner, tucked away in a room near the showers that's next to a minibar serving protein shakes.

If history is any guide, next week millions of people will make a New Year's resolution to go to one of the 180,000 gyms across the globe in an annual, usually ineffective, effort to lose a few pounds. The primary reason for this failure, according to the experts I spoke to, is that checking your weight is a misguided, demoralizing way to gauge overall health. "Many people are focused on the scale," said Mark de Gorter, chief executive officer of Workout Anytime. "But in doing so, they lose the bigger picture of transforming the body."

This could be you: a screenshot from the Naked app.

Source: Naked

Fitness gurus have long complained that the public's myopic focus on weight is counterproductive. Muscle is denser than fat, after all, and because fat takes up 22 percent more space than the same weight of muscle, the real measure should be volume. As you lose fat, you literally shrink, a fact that you can feel in the fit of your clothes. But it's hard to be objective when the scale is still creaking beneath your feet.

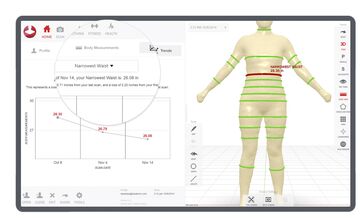

Enter the body scanner, which allows you to visualize your muscle gain and see, in three dimensions, how you are losing fat, and where. Companies such as Styku and Fit3D, which is available in select Equinox gyms, use a powerful camera, housed in an aluminum base about the size of a kid's tee-ball stand, to extract millions of data points in less than 30 seconds. The machine takes the surface measurements of your waist, chest, and arms and then assembles, from more than 600 infrared images, a 3D model that can be rotated, panned, and zoomed.

A Growing Trend

In the last year, health club execs such as De Gorter have discovered that the technology is one of the most effective ways to attract and retain clients. After testing it out at four of her clubs, Diana Williams, who founded Fernwood Fitness in Melbourne 26 years ago and now has 70 franchises across the continent, was so impressed she is rolling it out to her full network. "We use it as a selling tool but more as a retention tool," she said. "A measurement is just a number. But a visual image of what they look like, rather than their imagination, is much more motivating."

Because the scanner converts these measurements to a metric that people can understand, it also makes for easy before-and-after comparisons, said Raj Sareen, CEO of Los Angeles-based Styku, one of the largest suppliers of body scanning technology to fitness clubs. The company introduced the equipment at trade shows in 2015 after a pilot program with smaller gyms; in the 12 months since, it has grown 550 percent year over year, and the scanner is now available in 350 locations in 25 countries. It has been introduced most recently in Korea and the U.K. and will launch at select gyms in Brazil by early 2017.

Raj Sareen, Styku CEO

Source: Styku

The technology is familiar to anyone who's raised their hands overhead inside a scanner at an airport. It builds on the motion-sensing technology of Microsoft's Kinect, introduced for the Xbox 360 in 2010. Using it is a straightforward process: Stand on a raised circular platform that makes one 360-degree rotation while an infrared camera in the nearby aluminum stand takes pictures and then relays the information to a connected laptop.

David Barton, the fitness guru who in September opened TMPL (pronounced "temple," as in your body is one), pairs the Styku with an InBody machine, which measures body fat, and an on-site nutritionist to create a diet around the findings. "The most efficient way to change the outside is to know what's inside," he said.

An Accidental Discovery

The technology was not initially designed for health clubs. Sareen got his start by hacking into webcams and turning them into body scanners, then really got into it when he saw the possibilities inherent in Microsoft's Kinect, which could create lifelike 3D scans of objects with its high-powered camera. In 2012, his proposal was one of only 11 accepted to Techstars, the respected accelerator program, and he came out of it with a business plan to market the technology to clothing retailers in order to create clothes that would be the right size every time. (In essence, the perfect virtual fitting room.)

The Styku body scanner in action.

Source: Styku

Sareen did a pilot program with Nordstrom while one of his competitors, Bodymetrics, partnered with Bloomingdale's in New York and Selfridges in London. But the clothing industry is famously slow to adapt to technology, and it wasn't the right environment, anyway. Turns out that people were not ready for quite that level of reality while they were shopping. "We tried plastic surgeons, spas, dermatologists," said Sareen, but it wasn't until they went to health clubs that they found a receptive environment.

Even then, though, De Gorter was lukewarm the first time he saw it in action. "I thought seeing someone in 3D might be too revealing and too weird, but that notion was blown out of the water by everyone who tried it," he said. "Some people are a little reluctant to get on it, and some people don't like the results. But it becomes a great validator, a benchmark as they improve. Our early adopters saw the value in that right away."

Until now, the only way to get an accurate measurement of body fat was either through calipers—those small pliers that literally measure the amount of loose skin around your waist and arms—or via MRI, which is not a commercially viable proposition. But the Styku runs about $10,000 for a franchise operator, with no recurring fees at the moment. Fernwood Fitness's Williams says that the cost is a worthwhile investment, given the competitive advantage. "It's an added service," she said. "We do charge for it, but if someone's not motivated, we'll give them another scan at no charge to keep them." She also offers short-term 12-week challenges at her gyms, with Styku scans before and after "so they can see the difference," she said.

A Styku body scan readout.

Source: Styku

Beyond Fitness

It's not just health clubs. The Fairmont Scottsdale Princess and the Four Seasons Resort & Club Dallas at Las Colinas have introduced the Bod Pod, an egg-like scanning technology that measures muscle-to-fat ratio, so that their nutritionists can give recommendations while clients are traveling for business or just taking a few days off.

It may be available at the consumer level soon, as well. Farhad Farahbakhshian, CEO of Naked, is developing a version of the technology that works with your phone and can be set up at home. His background is in electrical engineering and computer science, but working part-time as a Spin instructor gave him insight into what keeps people motivated.

He hopes to roll out a retail-friendly version of the product by November, and he is optimistic that people will adopt it. "The biggest challenge is just convincing people that it's real," he said. "They think it's something you see in Star Trek."