https://www.perplexity.ai/page/meta-ai-decodes-thoughts-into-DnLY1gk2Rl.a.EtfMhlUZQ

Meta AI researchers have reported decoding brain activity into text without invasive procedures, reconstructing typed sentences from brain signals with up to 80% character-level accuracy using non-invasive brain recordings and artificial intelligence.

Meta's Brain-to-Text Technology

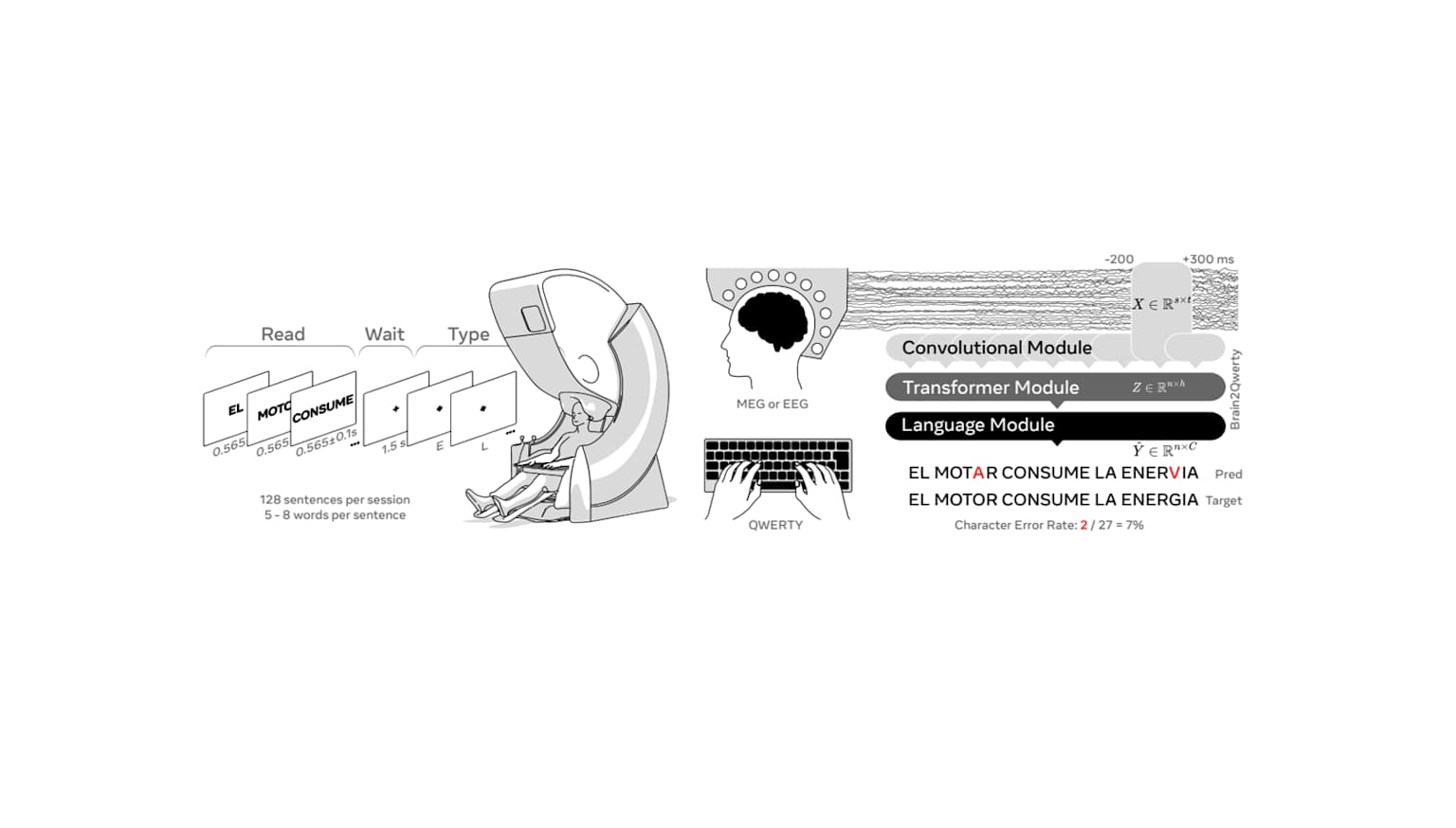

In collaboration with the Basque Center on Cognition, Brain and Language, Meta's research team has developed an AI model that translates neural signals into text [1][2]. The system records brain activity while participants type sentences, then trains a model to decode those signals into the typed characters [3]. For the best-performing participants, it achieved a character error rate (CER) of 19% on magnetoencephalography (MEG) data [1][3]. This substantially outperforms previous methods based on electroencephalography (EEG) and marks a notable advance in non-invasive brain-computer interfaces [4][5].
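The character error rate quoted above can be made concrete with a short sketch. It assumes the common definition of CER, the Levenshtein edit distance between the decoded and reference text divided by the reference length, because the study's exact evaluation protocol is not described here. The function names and the example sentences are illustrative, not taken from the research.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,           # delete a reference character
                            curr[j - 1] + 1,       # insert a hypothesis character
                            prev[j - 1] + cost))   # substitute, or match at no cost
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER = edit distance / number of reference characters (assumed definition)."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# Made-up example: two wrong characters in a 19-character sentence.
reference = "the quick brown fox"
decoded = "the quick brawn fax"
cer = character_error_rate(reference, decoded)
print(f"CER = {cer:.3f}")
```

Under this definition, a CER of 19% means roughly four in five characters come out right, which matches the "up to 80%" accuracy figure. The two numbers are not exact complements in general, since insertions also count as errors.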

Non-Invasive Methods: MEG and EEG

Meta's brain-to-text technology relies on two non-invasive recording techniques: magnetoencephalography (MEG) and electroencephalography (EEG). MEG performed better, achieving a character error rate of 19%, compared with higher error rates for EEG [1][2]. Both methods monitor brain activity in real time, but MEG provides higher spatial resolution because it detects the magnetic fields produced by neural electrical currents, whereas EEG measures electrical potentials at the scalp [3][4].

Key differences between MEG and EEG in this context include:

Accuracy: MEG outperforms EEG in decoding accuracy, with Meta's AI model predicting up to 80% of written characters using MEG data [5].

Equipment: MEG requires a magnetically shielded room and more sophisticated machinery, while EEG is more portable and widely accessible [6].

Signal quality: MEG signals are less distorted by the skull and scalp, offering cleaner data for AI interpretation [3].

Cost and availability: EEG is generally more cost-effective and widely available, making it more suitable for potential widespread application despite its lower accuracy [4].
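The link between signal quality and decoding accuracy can be illustrated with a toy simulation. This is a minimal sketch on synthetic data, not the study's model. It assumes each character produces a fixed hypothetical "sensor pattern", uses a nearest-centroid classifier as the decoder, and picks two arbitrary noise levels that do not represent measured MEG or EEG noise. All names and parameters are invented for illustration.

```python
import random


def make_templates(n_classes, n_sensors, rng):
    """One hypothetical sensor pattern per character class."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n_sensors)]
            for _ in range(n_classes)]


def sample(template, noise_std, rng):
    """A noisy observation of a class template."""
    return [x + rng.gauss(0.0, noise_std) for x in template]


def centroid(rows):
    """Per-sensor mean of a list of observations."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def decoding_accuracy(noise_std, seed=0, n_classes=5, n_sensors=16,
                      n_train=50, n_test=50):
    rng = random.Random(seed)  # same seed gives the same templates per noise level
    templates = make_templates(n_classes, n_sensors, rng)
    # "Training": estimate one centroid per class from noisy examples.
    centroids = [centroid([sample(t, noise_std, rng) for _ in range(n_train)])
                 for t in templates]
    # "Testing": assign each new noisy sample to the nearest centroid.
    correct = 0
    for label, t in enumerate(templates):
        for _ in range(n_test):
            x = sample(t, noise_std, rng)
            predicted = min(range(n_classes),
                            key=lambda k: sq_dist(x, centroids[k]))
            correct += predicted == label
    return correct / (n_classes * n_test)


clean = decoding_accuracy(noise_std=0.5)  # arbitrary "cleaner signal" setting
noisy = decoding_accuracy(noise_std=3.0)  # arbitrary "more distorted" setting
print(f"low-noise accuracy:  {clean:.2f}")
print(f"high-noise accuracy: {noisy:.2f}")
```

With identical underlying patterns, the decoder separates classes more reliably when the noise is lower. That is the qualitative reason a cleaner recording method can yield a lower error rate even with the same decoding approach.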

Hierarchical Neural Dynamics

Meta's research also offers insight into the hierarchical nature of neural dynamics during language production. It sheds light on how the brain processes and generates language, revealing a structured, layered sequence of neural activity. Key findings from the study include:

Identification of a 'dynamic neural code' linking successive thoughts [1]

Evidence of the brain processing language in a hierarchical manner [2]

Continuous holding of multiple layers of information during language production [2]

Seamless transition from abstract thoughts to structured sentences while maintaining coherence [2]

These discoveries provide a precise computational breakdown of the neural dynamics that coordinate language production in the human brain [1]. The research suggests that the brain does not simply process one word at a time, but maintains a multi-layered representation of information throughout language production [2]. This understanding could inform the development of more sophisticated AI language models and improve brain-computer interfaces for communication assistance [3][4].

Limitations and Future Applications

Meta's brain-to-text technology still faces several limitations and challenges. These hurdles also point to future applications and areas for further research:

Current limitations:

Potential future applications:

As research progresses, we may see improvements in signal processing, AI decoding algorithms, and more portable neuroimaging technologies. These advances could lead to practical, real-world thought-to-text systems, potentially transforming communication for people with disabilities and opening new frontiers in human-computer interaction [4][8].